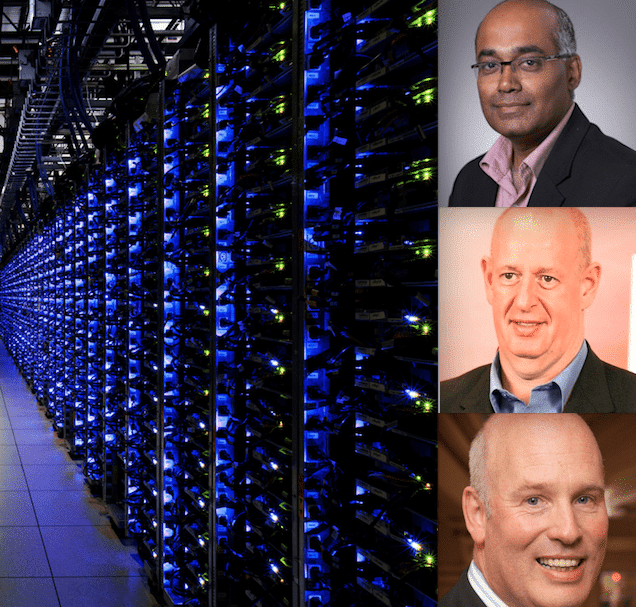

High-performance computing is not only about running advanced applications; it is transforming the way the world works. Michael Keegan, international business leader, chairman and board member; Andy Stevenson, Head of Middle East, Turkey and India, and Managing Director for India; and Ravi Krishnamoorthi, Senior Vice President and Head of Business Consulting, Fujitsu, describe the transformational roadmap that HPC enables, one that increasingly governs how every entity in a connected world functions, in conversation with Shanosh Kumar from the EFY group.

Q. How does high-performance computing address classic problems of automation?

A. In most technologically advanced nations, HPC is used to bring private-sector research to bear on real-world solutions. Take the example of the seaport in Singapore. HPC systems there help optimise the flow of cargo in and out of the port, interacting directly with ships and guiding them. They also analyse traffic patterns near the port to make sure the inflow and outflow of cargo is handled smoothly. Here we see a real crossover from research into impact.

Q. What are the infrastructural demands of smart environments?

A. Existing data, both structured and unstructured, can now be sourced from multiple devices and media and analysed intelligently. This helps analytics engines make informed decisions for us. That is intelligent analytics over big data.

Q. Can HPC address India-specific problems?

A. Michael: This is a big growth area in India. There is more data out there, and the need to gather intelligent, data-backed insight is driving demand for high-performance supercomputing capabilities. This becomes the fundamental engine that underpins digital transformation.

Ravi: There are some problems specific to India: last-mile connectivity, front-end security, a predominance of unstructured over structured data, and legacy systems still in operation. If India is to realise its smart city initiatives and become a Digital India, its infrastructure and connectivity demands need to be sorted out.

Q. What factors are pushing corporations to link every process to cloud platforms and HPC?

A. Andy: Timing is everything. The big macroeconomic factors behind HPC are that the price of computing keeps coming down, making it cheaper to process structured and unstructured data, and that connectivity and bandwidth are improving all the time. We also have a population that is increasingly impatient for information and wants to make more intelligent decisions based on data rather than belief.

Ravi: To add to that, the consumer mindset revolves around mobility and access, and this dependence on technology drives the whole data spectrum. It is making a whole lot of processes automated. Interoperability will happen.

Q. What is your take on interoperability?

A. Andy: Open standards make more room for interoperability. There used to be very few true open standards. With software innovation, many people, including those who run big businesses, now look to open-standard software as a key asset. The age of proprietary software is passing. Transformation will take place when a farmer, or anyone else, uses this information through a smartphone, backed by compute power somewhere else.

Q. How accurate are HPC systems after their operational deployment?

A. Andy: Looking across the spectrum, HPC has some problem domains that do not lend themselves to end-point devices. Weather is one example: in measuring its impact, today's algorithms and models can resolve down to about a kilometre. India's meteorological department is trying to take the next generation of algorithms and models down to a grid of less than 100 metres on a global scale. So we can imagine the amount of insight generated as the analytics engine takes into account ocean currents, temperature, convection currents and all sorts of other parameters.

Q. Does the future of compute power lie in a distributed environment or, as devices keep shrinking, in the palm of our hands?

A. Michael: HPC platforms have been part of various deployments across the world. Meteorological departments and tsunami alert systems all use this platform to run simulations and make predictions. Going forward, the SPARC HPC platform will converge with ARM-based technology, and we will be looking to provide successive roadmaps, particularly in the mobile space. This collaboration will be the future direction.

Q. What is your take on security in HPC?

A. Michael: The approach to security depends on which business or consumer services are being made available. We have learned a lot from the banking industry, for example, using internet security protocols for safe payments.

We also see a rise in cybercrime. The first threat is the insider threat: fraud by people whose privileged access lets them cause a loss of money or data. We can protect against this through process, by controlling access and assessing risk. These are major threats from the customer's standpoint. Today we can limit access to certain people simply by giving them a wearable.