Digital Twin is an emerging technology that many consider the next big innovation. However, there is more to Digital Twin than simply providing simulation.

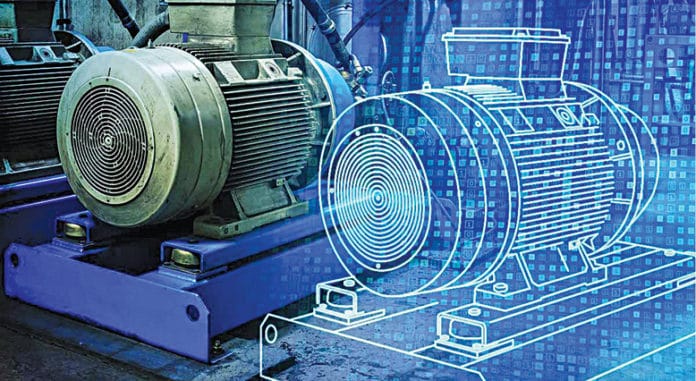

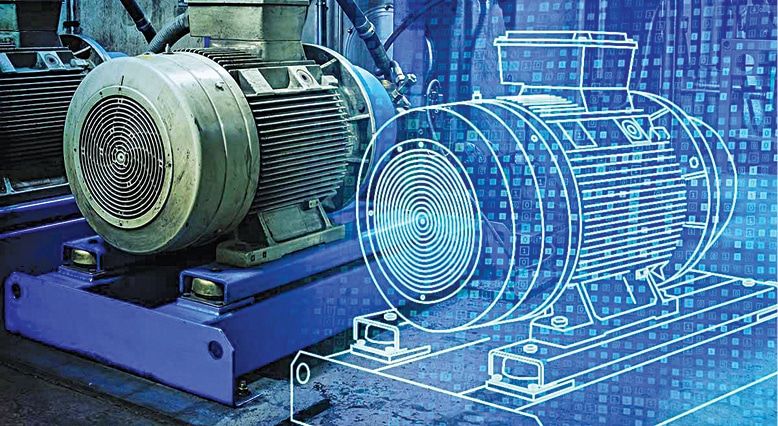

When we hear the word ‘twin,’ we picture human twins with identical features such as the same eyes, lips, and nose. Digital Twin is similar. It is defined as the virtual representation of a physical object or system across its entire lifecycle, which uses real-time data or data from other sources to enable learning, reasoning, and dynamic recalibration for improved decision making.

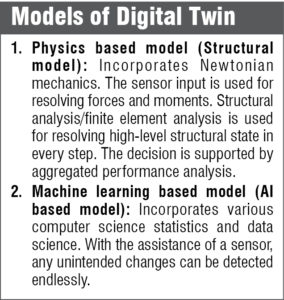

As Digital Twin is still evolving, its definition varies from company to company. GE, for instance, describes it as a software representation of a physical asset, system, or process designed to detect, prevent, predict, and optimise through real-time analytics to deliver business value. Put simply, a Digital Twin is a virtual model of a physical thing. That thing could be a car, tunnel, bridge, jet engine, or anything else fitted with sensors that collect data, which is then mapped onto the virtual model.

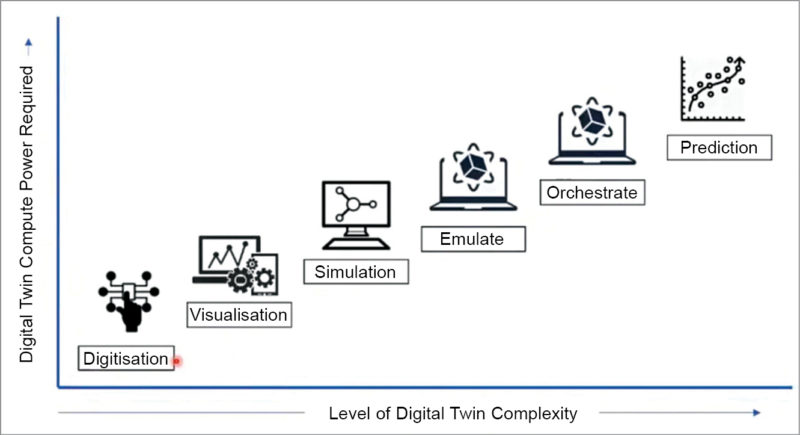

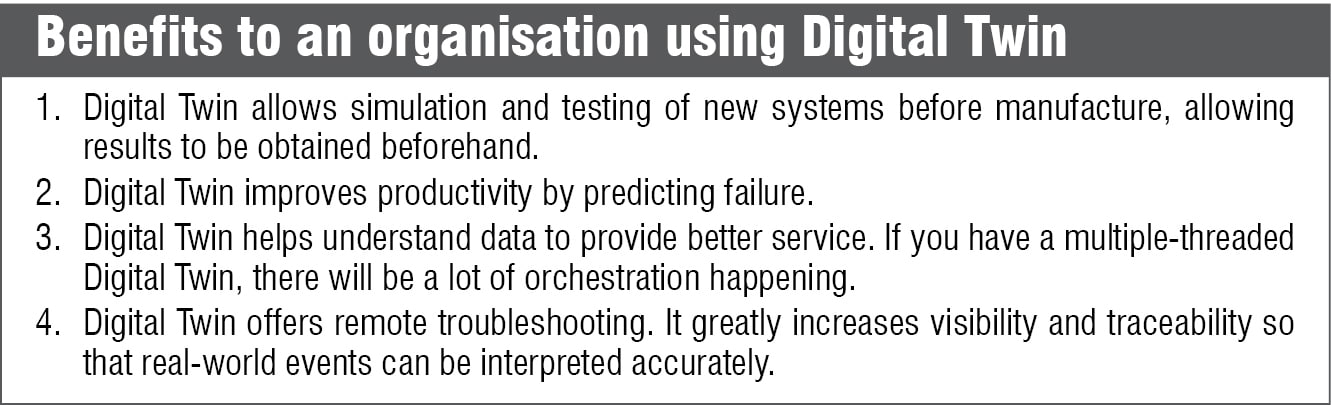

But is Digital Twin all about simulation? Is it just real-world scenarios replicated in the digital world? Not exactly. A Digital Twin starts out as a simulation but, unlike a simulation, it keeps updating itself in real time. A simulation produces results by running tests and assessments on a virtual version of a physical asset, but it is static: it does not know how the actual asset behaves in the real world, so parameters must always be fed into the simulator to make it more realistic.
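To make the distinction concrete, here is a minimal Python sketch, not a real Digital Twin platform. The temperature formula, the `MotorTwin` class and the readings are all hypothetical: the simulation answers only from the parameters it is given, while the twin's state follows whatever the physical asset reports.

```python
from dataclasses import dataclass, field
from typing import List, Optional


def simulate_temperature(ambient_c: float, load_pct: float) -> float:
    """Static simulation: the output depends only on the parameters fed in."""
    # Hypothetical model: temperature rises 0.5 °C per percent of load.
    return ambient_c + 0.5 * load_pct


@dataclass
class MotorTwin:
    """Toy Digital Twin whose state follows the real asset's sensor readings."""
    asset_id: str
    temperature_c: Optional[float] = None
    history: List[float] = field(default_factory=list)

    def update(self, reading_c: float) -> None:
        # Each reading from the physical asset refreshes the twin's state
        # and is kept as history for later analysis.
        self.temperature_c = reading_c
        self.history.append(reading_c)


predicted = simulate_temperature(ambient_c=25.0, load_pct=80.0)

twin = MotorTwin("motor-01")
for reading in (62.0, 68.5, 71.2):  # readings as they arrive from the sensor
    twin.update(reading)

print(predicted)           # 65.0
print(twin.temperature_c)  # 71.2
```

The simulation keeps answering 65.0 until someone changes its inputs, whereas the twin already reflects the 71.2 °C the motor is actually reporting.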

In short, a Digital Twin is the virtual representation of a system across its entire lifecycle. This includes its design, production, and operation.

Early use of Digital Twin

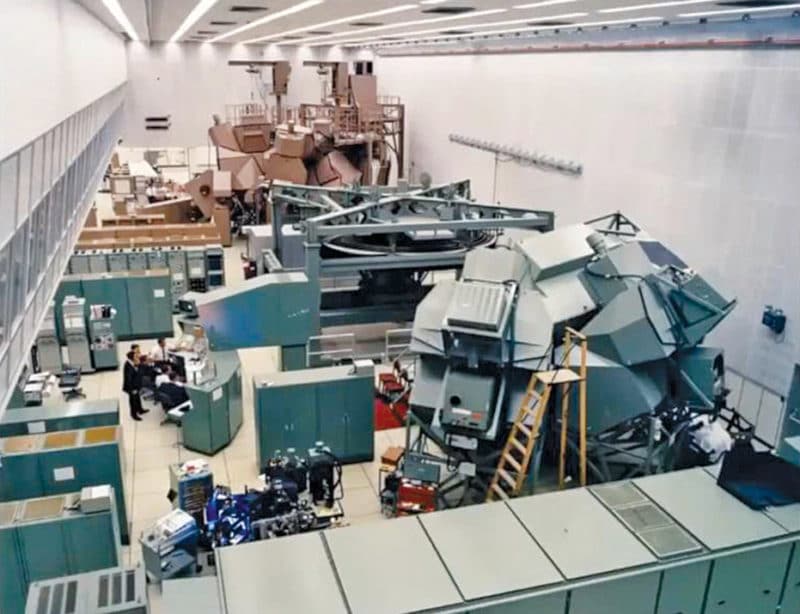

In 1970, NASA launched the Apollo 13 spacecraft. However, after an oxygen tank exploded on the way to the Moon, it could not complete its planned lunar landing and had to return to Earth. It is said that NASA used the concept of Digital Twin even then, long before IoT existed.

The story goes like this: Before the mission began, a simulator was built to train the chosen astronauts to handle emergencies. During the mission, the astronauts aboard Apollo 13 had access only to the spacecraft's telemetry data. Fortunately, the same telemetry data was also available to the ground station on Earth. Although the two were hundreds of thousands of kilometres apart, this shared virtual representation allowed them to exchange messages while working from the same information. Every instruction was verified before it was given, and it was communicated only if the results were satisfactory. This constant contact played a big role in bringing the Apollo 13 spacecraft safely back to Earth.

Since no IoT was involved, it cannot technically be called a Digital Twin. Still, it qualifies as an early example of the concept, because it combined the simulator, the telemetry, and the communication link into one system that could handle several tasks at once and respond quickly.

In 1977, several flight simulators were introduced, which allowed pilots to practise their flying techniques. In 1982, AutoCAD was launched, which made designing 2D and 3D models easier in the years that followed.

In 2002, Dr Michael Grieves introduced the first concept of Digital Twin, and in 2011, NASA published several papers on the subject. In 2015, GE became the first company to launch a Digital Twin initiative. After 2015, several industrial organisations started adopting Digital Twin as part of their operations.

IoT and its shortcomings

As we all know, IoT stands for the Internet of Things. Since we humans have long used the Internet to communicate with each other, that conventional Internet is sometimes called the Internet of People; IoT extends it to things. The word ‘things’ generally refers to physical objects such as a lightbulb, a refrigerator, or a machine in a factory. Anything that can communicate with other entities via the Internet can be part of the Internet of Things.

IoT comprises sensors, actuators, and controllers. Sensors are electromechanical or electrochemical devices that sense physical quantities and convert them into electrical signals.

Actuators are devices that receive electrical signals and perform a physical action. Finally, the controller acts as the brain and controls both sensors and actuators: it reads electrical signals from the sensors and sends electrical signals to the actuators.
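The sense, decide, act loop described above can be sketched in a few lines of Python. This is an illustration under simple assumptions: `TemperatureSensor`, `FanActuator` and `Controller` are hypothetical classes that stand in for hardware, and a fixed threshold stands in for real control logic.

```python
class TemperatureSensor:
    """Hypothetical sensor that reports a temperature in °C."""

    def __init__(self, value_c: float) -> None:
        self.value_c = value_c  # stands in for the physical quantity

    def read(self) -> float:
        return self.value_c


class FanActuator:
    """Hypothetical actuator: a fan that is switched on or off."""

    def __init__(self) -> None:
        self.on = False

    def set(self, on: bool) -> None:
        self.on = on


class Controller:
    """The 'brain': reads the sensor and drives the actuator."""

    def __init__(self, sensor: TemperatureSensor, actuator: FanActuator,
                 threshold_c: float) -> None:
        self.sensor = sensor
        self.actuator = actuator
        self.threshold_c = threshold_c

    def step(self) -> bool:
        reading = self.sensor.read()                   # signal in from the sensor
        self.actuator.set(reading > self.threshold_c)  # signal out to the actuator
        return self.actuator.on


sensor = TemperatureSensor(24.0)
fan = FanActuator()
controller = Controller(sensor, fan, threshold_c=30.0)
print(controller.step())  # False: 24.0 °C is below the threshold
sensor.value_c = 33.5     # the room heats up
print(controller.step())  # True: the controller switches the fan on
```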

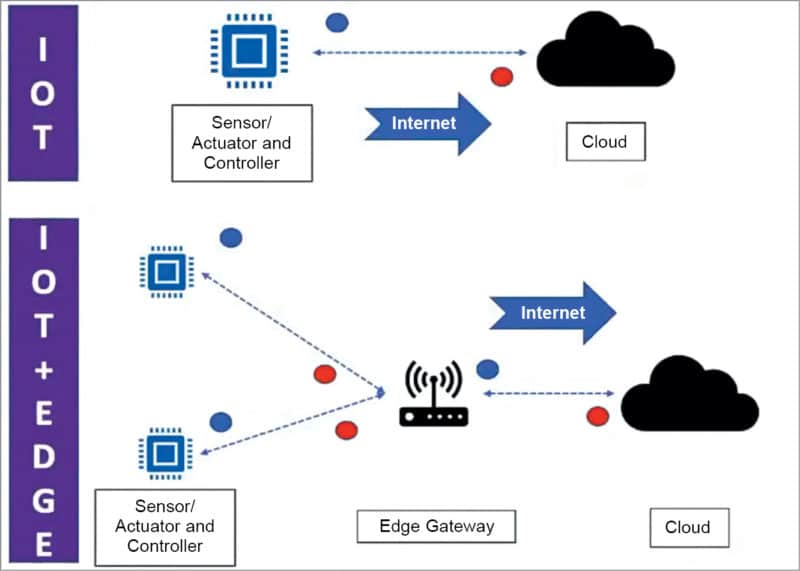

When IoT devices communicate, the data they produce is typically stored in the cloud. This architecture may look simple, but it has several drawbacks. The absence of an Internet connection, for instance, can lead to catastrophic failures, as the sensors and actuators cannot communicate with the cloud and data is lost.

Also, if every sensor tries to communicate with the cloud at the same time, the flood of packets can congest and slow down the path from the sensors or controllers to the cloud. Edge computing is used to avoid such scenarios.

When edge computing and IoT work together, sensors and controllers do not talk to the cloud directly. Instead, they communicate through an edge gateway, which aggregates all the data and transfers it to the cloud. The sensors, actuators, and controller reach the gateway over a local link, so no Internet connection is needed between them and the gateway.

This way, the system can still respond when the Internet connection is down. It also reduces latency and the number of messages sent to the cloud, because the edge gateway can process data locally and forward only important, meaningful information.
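A minimal sketch of the edge gateway's role, assuming a hypothetical `EdgeGateway` class and a plain list (`sent`) standing in for the cloud endpoint. It combines three of the ideas above: raw readings are aggregated into a summary, urgent readings are flagged locally, and messages are queued while the Internet link is down rather than lost.

```python
from statistics import mean
from typing import Dict, List


class EdgeGateway:
    """Toy edge gateway: aggregates local readings, raises alerts locally,
    and buffers outgoing messages while the Internet link is down."""

    def __init__(self, alert_threshold: float) -> None:
        self.alert_threshold = alert_threshold
        self.readings: List[float] = []  # raw readings from local sensors
        self.outbox: List[Dict] = []     # messages waiting for the cloud
        self.sent: List[Dict] = []       # stands in for the cloud endpoint

    def receive(self, value: float) -> None:
        self.readings.append(value)
        # Local decision: flag urgent readings without asking the cloud.
        if value > self.alert_threshold:
            self.outbox.append({"type": "alert", "value": value})

    def flush_summary(self) -> None:
        # Replace many raw readings with one aggregated message.
        if self.readings:
            self.outbox.append({
                "type": "summary",
                "count": len(self.readings),
                "mean": mean(self.readings),
                "max": max(self.readings),
            })
            self.readings.clear()

    def sync(self, online: bool) -> int:
        """Send queued messages if the link is up; otherwise keep them queued."""
        if not online:
            return 0
        count = len(self.outbox)
        self.sent.extend(self.outbox)
        self.outbox.clear()
        return count


gateway = EdgeGateway(alert_threshold=80.0)
for value in (70.0, 72.0, 85.0, 71.0):
    gateway.receive(value)
gateway.flush_summary()

print(gateway.sync(online=False))  # 0: link down, messages stay queued
print(gateway.sync(online=True))   # 2: one alert plus one summary
```

Four raw readings become two messages, and nothing is dropped while the link is down. A real gateway would also need persistent storage and retry logic, which this sketch leaves out.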

Where Digital Twin meets IoT

Without IoT, a Digital Twin is nothing but a static simulation model. With the addition of IoT, the twin receives real-time data from physical things, which turns it into a living, learning model.

Let us understand this from the perspective of a car. To simulate how a car behaves in a difficult situation, we need to feed in some parameters, but the actual result sometimes differs from the one the simulation gives. If instead we fit the car with sensors whose readings feed directly into the Digital Twin, we get far more realistic data.

Where Digital Twin meets AI

Digital Twin is a continuously learning system, powered by various algorithms.

Let us assume that we have several pumps in our factory. To make a Digital Twin of each pump, we first need a 3D model of it. An engineer collects the physical, mechanical, electrical, and other parameters to create the 3D design. After that, the actual pumps are fitted with sensors, which deliver data to their digital replicas.

Each twin displays the live data and also stores it in a database as historical data, which can then be used to train a model.
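As a sketch of the historical-data step, the twin's readings can be appended to a database table as they arrive. This example uses Python's built-in `sqlite3` module with an in-memory database; the `TwinHistory` class, the table layout and the vibration column are assumptions chosen for illustration.

```python
import sqlite3
from typing import List, Tuple


class TwinHistory:
    """Stores a twin's live readings so they can later train a model."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS readings "
            "(asset_id TEXT, ts REAL, vibration_mm_s REAL)"
        )

    def record(self, asset_id: str, ts: float, vibration_mm_s: float) -> None:
        with self.conn:  # the context manager commits the insert
            self.conn.execute(
                "INSERT INTO readings VALUES (?, ?, ?)",
                (asset_id, ts, vibration_mm_s),
            )

    def history(self, asset_id: str) -> List[Tuple[float, float]]:
        """Return (timestamp, vibration) pairs for one asset, oldest first."""
        rows = self.conn.execute(
            "SELECT ts, vibration_mm_s FROM readings "
            "WHERE asset_id = ? ORDER BY ts",
            (asset_id,),
        )
        return rows.fetchall()


store = TwinHistory()
store.record("pump-07", ts=2.0, vibration_mm_s=2.4)
store.record("pump-07", ts=1.0, vibration_mm_s=2.1)
store.record("pump-08", ts=1.0, vibration_mm_s=3.0)
print(store.history("pump-07"))  # [(1.0, 2.1), (2.0, 2.4)]
```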

Now assume that hundreds of pumps have been working and been tracked for the past one or two years. Every time a pump gets damaged, some physical parameters change. With this data, a model can be built to predict pump malfunctions: if a pump starts showing the same pattern that preceded an earlier failure, the model can predict that it will stop working well after some time. Parts can then be replaced or repaired before the malfunction occurs, preventing losses.
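The article does not say which algorithm is used, so the following is only one simple stand-in: fit a straight-line trend to a pump's historical vibration readings with ordinary least squares, then extrapolate when it will cross an alarm level. The readings, the threshold and the function names are hypothetical; production systems typically use richer models trained across many pumps.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def days_until_threshold(days: Sequence[float], vibration: Sequence[float],
                         threshold: float) -> Optional[float]:
    """Extrapolate the linear trend to estimate days left before the alarm level.

    Assumes `days` is in ascending order.
    """
    a, b = fit_line(days, vibration)
    if b <= 0:
        return None  # no upward trend, so no failure is predicted
    crossing_day = (threshold - a) / b
    return max(0.0, crossing_day - days[-1])  # 0.0 if already past the threshold


days = [0, 10, 20, 30]            # days since monitoring began
vibration = [2.0, 2.5, 3.0, 3.5]  # hypothetical vibration readings, mm/s
remaining = days_until_threshold(days, vibration, threshold=7.0)
print(round(remaining, 1))  # 70.0
```

In this toy example, those 70 days are the window in which parts could be replaced or repaired before the malfunction.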

Jet engine case study

Suppose a jet engine is fitted with sensors that push data to its Digital Twin. After the engine has been running for some time, some damage has probably occurred. Based on the historical database and the predictive model, the Digital Twin estimates the damage to a particular part. But a physical inspection may reveal a different picture, because predictive models are not 100% accurate.

That is a key point to understand when using Digital Twin. The actual damage found during inspection must be measured and fed back into the model to improve it. This is an iterative process: over time, the model improves and its predictions become more accurate.
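The feedback loop can be sketched as a model that corrects itself after each inspection. Here a hypothetical base model is wrapped by a hypothetical `CalibratedDamageModel`, which moves a single correction factor part of the way toward the ratio observed at each inspection; the wear figures are invented. It is one simple way to illustrate iterative recalibration, not how any particular engine maker does it.

```python
from typing import Callable


class CalibratedDamageModel:
    """Scales a base damage prediction by a factor learned from inspections."""

    def __init__(self, base_model: Callable[[float], float],
                 learning_rate: float = 0.5) -> None:
        self.base_model = base_model
        self.learning_rate = learning_rate
        self.scale = 1.0  # start by trusting the base model as-is

    def predict(self, operating_hours: float) -> float:
        return self.scale * self.base_model(operating_hours)

    def calibrate(self, operating_hours: float, measured_damage: float) -> float:
        """Feed an inspection result back into the model.

        Returns the relative error of the prediction made before this update,
        so the caller can watch accuracy improve across inspections.
        """
        raw = self.base_model(operating_hours)
        if measured_damage <= 0 or raw <= 0:
            raise ValueError("this sketch expects positive damage values")
        error = abs(self.scale * raw - measured_damage) / measured_damage
        # Move the scale part of the way toward the ratio seen at inspection.
        self.scale += self.learning_rate * (measured_damage / raw - self.scale)
        return error


# Hypothetical base model: one unit of wear per 1,000 operating hours.
model = CalibratedDamageModel(base_model=lambda hours: hours / 1000.0)
for hours, measured in [(1000, 0.8), (2000, 1.6), (3000, 2.4)]:
    print(f"{model.calibrate(hours, measured):.2%}")
# prints 25.00%, then 12.50%, then 6.25%
```

In this example each inspection halves the error because the true ratio is constant and `learning_rate=0.5` closes half of the remaining gap every time; a real system would recalibrate many parameters, not one.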

This use case also matters for wind turbines. A Digital Twin can predict the correct alignment of a wind turbine, that is, the direction in which it will capture the most power from the wind. It can also indicate when predictive maintenance should be carried out.
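For the alignment part, once a twin has a forecast of the wind direction, the remaining arithmetic is small. This sketch assumes compass bearings in degrees, a hypothetical forecast, and the widely used cos³ rule of thumb for how much power is retained under yaw misalignment; measured turbine behaviour often departs from that rule.

```python
import math


def yaw_error_deg(nacelle_deg: float, wind_deg: float) -> float:
    """Signed smallest rotation, in degrees, that points the rotor into the wind.

    Positive means turn clockwise (towards increasing compass bearing).
    """
    diff = (wind_deg - nacelle_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def power_fraction(yaw_error: float) -> float:
    """Rough share of power retained at a given yaw misalignment.

    Uses the common cos^3 simplification; real turbines deviate from it.
    """
    return max(0.0, math.cos(math.radians(yaw_error))) ** 3


# Hypothetical twin forecast: wind will come from 20° while the nacelle faces 350°.
error = yaw_error_deg(nacelle_deg=350.0, wind_deg=20.0)
print(error)                            # 30.0
print(round(power_fraction(error), 3))  # 0.65
```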

Future

It is estimated that companies will invest up to US$1.1 trillion in IoT by 2023. There are forecast to be 1.9 billion 5G cellular subscriptions by 2024, which will push IoT adoption significantly. The total economic impact of IoT could range from US$4 trillion to US$11 trillion by 2025.

According to MarketsandMarkets, the global Digital Twin market was valued at US$3.1 billion in 2020 and is projected to reach US$48.2 billion by 2026, more than a fifteen-fold increase. Growth is expected to accelerate further after Covid.
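The two market figures above imply a compound annual growth rate, which can be checked with the standard formula (end / start)^(1 / years) - 1. A short Python check, using only the numbers quoted in the paragraph:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# US$3.1 billion in 2020 to US$48.2 billion in 2026.
growth = cagr(3.1, 48.2, 2026 - 2020)
print(f"{growth:.1%}")  # 58.0%
```

In other words, the projection assumes the market grows by roughly 58% every year for six years.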

In summary, the concept of Digital Twin has been around for a long time. Now that we are recognising its potential and what it can achieve together with IoT and AI, it is gradually gaining acceptance across industries. Going by the numbers, it will soon find widespread application.

The article is based on the talk Digital Twin & IoT by Arnab Ghosh, Developer and Researcher at Accenture Solutions, India that was presented at the February edition of Tech World Congress and India Electronics Week 2021.